ZFS Deduplication with NTFS

ZFS deduplication was recently integrated into build 128 of OpenSolaris, and while others have tested it out with normal file operations, I was curious to see how effective it would be with zvol-backed NTFS volumes. Due to the structure of NTFS I suspected that it would work well, and the results confirmed that.

NTFS allocates space in fixed-size units called clusters. The default cluster size for NTFS volumes under 16 TB is 4K, but a different value can be set explicitly when the volume is formatted. For this test I stuck with the default 4K cluster size and matched the zvol block size to it, so that every NTFS cluster maps to exactly one ZFS block and identical clusters can be deduplicated regardless of where they land on the volume. In reality, the zvol block size most likely had a negligible effect on this particular test, but for normal workloads the difference could be considerable.
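To illustrate why the block size can matter, here is a minimal Python sketch of fixed-size block deduplication. It is a toy model and not how ZFS is implemented: the helper names (`make_volume`, `dedup_ratio`), the volume sizes, and the cluster offsets are all assumptions chosen for illustration. The same payload is placed at different cluster-aligned offsets on two simulated volumes, and the blocks are then hashed at two block sizes.

```python
import hashlib
import random

CLUSTER = 4096  # NTFS default cluster size used in this post


def random_bytes(rng, n):
    """Return n pseudo-random bytes from the given random.Random instance."""
    return rng.getrandbits(8 * n).to_bytes(n, "little")


def make_volume(payload, start_cluster, size_clusters, seed):
    """Hypothetical volume: unique filler with the payload at a cluster-aligned offset."""
    rng = random.Random(seed)
    vol = bytearray(random_bytes(rng, size_clusters * CLUSTER))
    start = start_cluster * CLUSTER
    vol[start:start + len(payload)] = payload
    return bytes(vol)


def dedup_ratio(volumes, block_size):
    """Referenced blocks divided by unique blocks, comparing blocks by SHA-256."""
    total, unique = 0, set()
    for vol in volumes:
        for off in range(0, len(vol), block_size):
            unique.add(hashlib.sha256(vol[off:off + block_size]).digest())
            total += 1
    return total / len(unique)


# The same 64-cluster "file" lands at cluster 3 on one volume and cluster 8 on the other.
payload = random_bytes(random.Random(0), 64 * CLUSTER)
vol_d = make_volume(payload, start_cluster=3, size_clusters=128, seed=1)
vol_e = make_volume(payload, start_cluster=8, size_clusters=128, seed=2)

ratio_4k = dedup_ratio([vol_d, vol_e], 4096)    # block size == cluster size
ratio_16k = dedup_ratio([vol_d, vol_e], 16384)  # block size == 4 clusters

print(f"4K blocks:  {ratio_4k:.2f}x")
print(f"16K blocks: {ratio_16k:.2f}x")
```

With 4K blocks the shared payload is found on both volumes (1.33x in this toy setup). With 16K blocks the two copies sit at different positions relative to the block boundaries, so no block matches and the ratio stays at 1.00x. When the offsets happen to differ by a multiple of the larger block size, as is likely in the test below, the block size makes little difference.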

The OpenSolaris system was prepared by installing OpenSolaris build 127, installing the COMSTAR iSCSI Target, and then BFU'ing the system to build 128.

The zpool was created with both dedup and compression enabled:

    # zpool create tank c4t1d0
    # zfs set dedup=on tank
    # zfs set compression=on tank
    # zpool list tank
    NAME   SIZE  ALLOC   FREE  CAP  DEDUP  HEALTH  ALTROOT
    tank  19.9G   148K  19.9G   0%  1.00x  ONLINE  -

Next, the zvol block devices were created. Note that the volblocksize option was explicitly set to 4K:

    # zfs create tank/zvols
    # zfs create -V 4G -o volblocksize=4K tank/zvols/vol1
    # zfs create -V 4G -o volblocksize=4K tank/zvols/vol2
    # zfs list -r tank
    NAME              USED  AVAIL  REFER  MOUNTPOINT
    tank             8.00G  11.6G    23K  /tank
    tank/zvols       8.00G  11.6G    21K  /tank/zvols
    tank/zvols/vol1     4G  15.6G    20K  -
    tank/zvols/vol2     4G  15.6G    20K  -

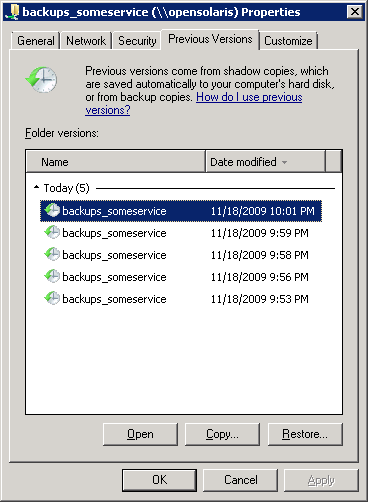

After the zvols were created, they were shared with the COMSTAR iSCSI Target and then initialized and formatted as NTFS from Windows. With only 4 MB of data on the volumes, the dedup ratio shot way up:

    # zpool list tank
    NAME   SIZE  ALLOC   FREE  CAP    DEDUP  HEALTH  ALTROOT
    tank  19.9G  3.88M  19.9G   0%  121.97x  ONLINE  -
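Every step of this test is checked by reading the ALLOC and DEDUP columns of `zpool list`. If you want to log those values while repeating the experiment, a small parser is enough. The sketch below is only an illustration: the function name `parse_zpool_list` is hypothetical, and it assumes whitespace-separated columns with a single header row, as in the output shown above.

```python
def parse_zpool_list(text):
    """Return one dict per pool, keyed by the column names in the header row."""
    lines = [ln for ln in text.strip().splitlines() if ln.strip()]
    header = lines[0].split()
    return [dict(zip(header, ln.split())) for ln in lines[1:]]


# Sample taken from the zpool list output right after the NTFS format.
sample = """\
NAME   SIZE  ALLOC   FREE  CAP    DEDUP  HEALTH  ALTROOT
tank  19.9G  3.88M  19.9G   0%  121.97x  ONLINE  -
"""

pool = parse_zpool_list(sample)[0]
dedup = float(pool["DEDUP"].rstrip("x"))  # strip the trailing "x" from the ratio
print(pool["NAME"], pool["ALLOC"], dedup)
```

In practice the text would come from running `zpool list tank` after each copy; here it is pasted in so the example stands alone.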

The NTFS volumes were configured in Windows as disks D: and E:. I started off by copying a 10 MB file and then a 134 MB file to D:. The 10 MB file was used to offset the larger file from the start of the disk so that it wouldn’t be in the same location on both volumes. As expected, the dedup ratio dropped down towards 1x as there was only a single copy of the files:

    # zpool list tank
    NAME   SIZE  ALLOC   FREE  CAP  DEDUP  HEALTH  ALTROOT
    tank  19.9G   133M  19.7G   0%  1.39x  ONLINE  -

The 134 MB file was then copied to E:, and immediately the dedup ratio jumped up. So far, so good: dedup works across multiple NTFS volumes:

    # zpool list tank
    NAME   SIZE  ALLOC   FREE  CAP  DEDUP  HEALTH  ALTROOT
    tank  19.9G   173M  19.7G   0%  2.26x  ONLINE  -

A second copy of the 134 MB file was then written to E: to test dedup between files on the same NTFS volume. As expected, the dedup ratio rose again, to around 3x:

    # zpool list tank
    NAME   SIZE  ALLOC   FREE  CAP  DEDUP  HEALTH  ALTROOT
    tank  19.9G   184M  19.7G   0%  3.19x  ONLINE  -

Though simple, these tests showed that ZFS deduplication performed well: it conserved disk space both within a single NTFS volume and across multiple volumes in the same ZFS pool. The dedup ratios were even a bit higher than expected, which suggests that quite a bit of the NTFS metadata, at least initially, was deduplicated.
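To put a rough number on "higher than expected", the sketch below compares the observed ratios with a naive estimate that counts only the two test files. It is back-of-the-envelope arithmetic under stated assumptions: it ignores compression and all NTFS metadata, treats the 10 MB file as unique data, and assumes every copy of the 134 MB file deduplicates perfectly. The function name `expected_ratio` is made up for this example.

```python
FILE_SMALL_MB = 10   # offset file, stored once on D:
FILE_LARGE_MB = 134  # file that was copied one, two, then three times


def expected_ratio(large_copies):
    """Naive dedup ratio: referenced data divided by unique data, files only."""
    referenced = FILE_SMALL_MB + large_copies * FILE_LARGE_MB
    unique = FILE_SMALL_MB + FILE_LARGE_MB
    return referenced / unique


# Ratios reported by zpool list after each copy of the 134 MB file.
observed = {1: 1.39, 2: 2.26, 3: 3.19}

for copies, seen in observed.items():
    want = expected_ratio(copies)
    print(f"{copies} copies: expected {want:.2f}x, "
          f"observed {seen:.2f}x, excess {seen - want:+.2f}")
```

Under these assumptions the estimates come out to 1.00x, 1.93x, and 2.86x, so each observed ratio is roughly 0.3 to 0.4 higher, which is consistent with a share of deduplicated NTFS metadata on top of the file data.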